Real-Time Reality Editing: The Other Side of Censorship

Mandela effects in real time. Are they a form of censorship, or self-editing? You decide.

Real-Time Reality Editing and the Future of Free Speech

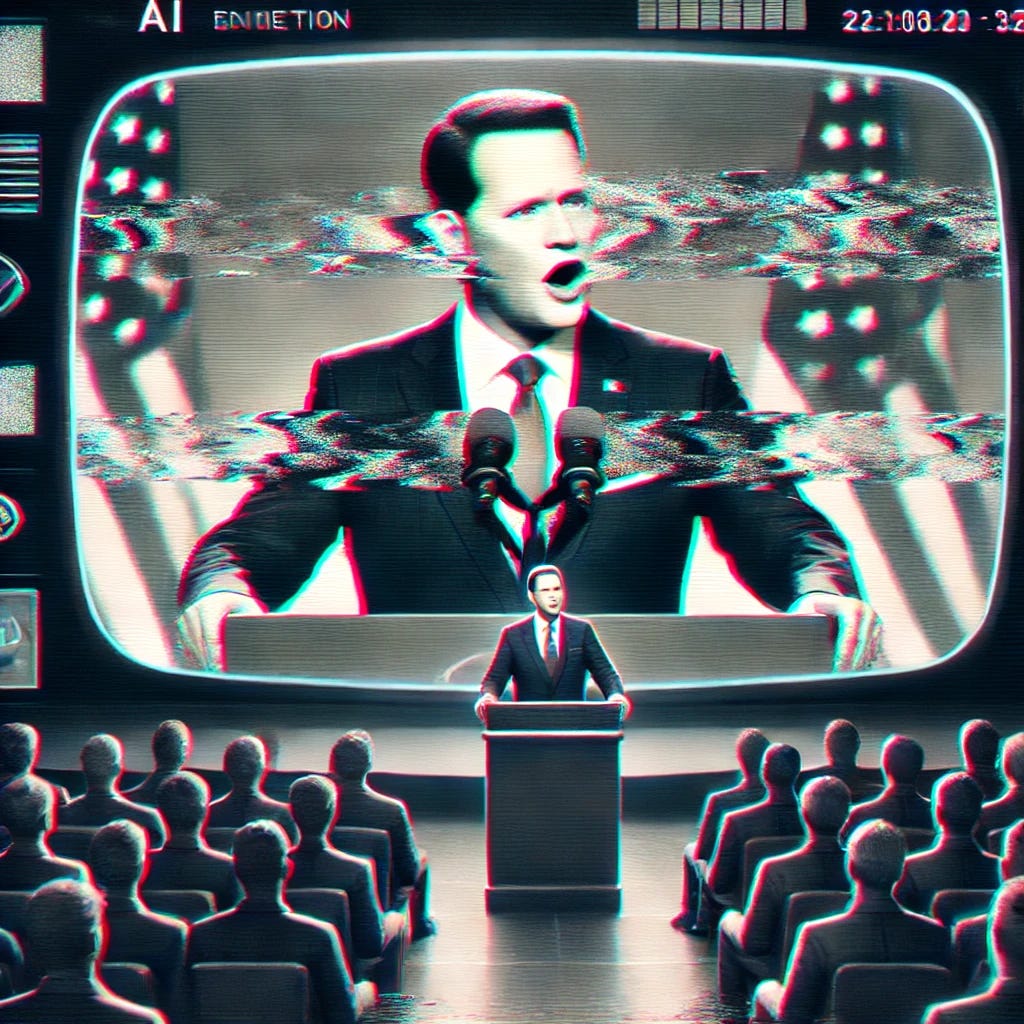

In today’s digital age, freedom of speech is often framed around traditional censorship, where voices are silenced and information is withheld. However, another, subtler form of control is emerging: real-time reality editing. Powered by artificial intelligence, this new phenomenon doesn’t block information; it changes it, seamlessly altering events and records as they happen. Unlike old-fashioned censorship, which protests or alternative platforms can counteract, real-time reality editing can leave no obvious trace, raising profound concerns about its impact on free speech and the public’s ability to perceive reality accurately.

What is Real-Time Reality Editing?

At its core, real-time reality editing refers to using AI and machine learning to manipulate live or recorded content, adjusting how information is perceived after the fact. Instead of simply preventing access to content, this technology allows instantaneous modifications, often without the audience’s knowledge. The result? What you take to be an accurate record of reality may be a carefully engineered version that serves a particular narrative.

A Free Speech Dilemma

The First Amendment guarantees the right to speak freely, but how does that apply in a world where speech can be altered in real time? If a public figure's words or actions can be seamlessly modified after the fact, especially immediately afterward, does that person still have control over their own speech? And more importantly, how can the public trust that what they see, hear, or read is authentic? These questions highlight the complex intersection between technology, free speech, and the public’s right to know.

The rise of AI manipulation tools, like deepfakes, offers new possibilities for both creativity and deception. When used for parody, these technologies can enhance free expression by allowing creators to engage in satirical commentary. However, when used to deceive, for example by altering one or two words in speeches, videos, or events without transparency, they threaten the very principles of free speech, blurring the lines between truth and fiction.

This is the crux of the real-time reality editing debate: whether what we see and hear reflects reality or a curated, AI-generated version of it. If such editing becomes normalized, the consequences for our shared grip on reality and for the stability of social systems could be dire.

Traditional Censorship vs. Reality Editing

Traditional censorship has long been viewed as a direct affront to free speech, involving the suppression, removal, or blocking of information deemed unacceptable or dangerous. From government crackdowns on dissenting voices to media blackouts in authoritarian regimes, this form of censorship operates openly, allowing citizens and organizations to challenge or push back against it. The fight for free speech, especially in a First Amendment context, often involves confronting these overt restrictions, where banned content is either denied publication or completely erased from public view.

Reality editing, however, represents a more covert and insidious threat. Rather than deleting content, it subtly modifies facts and events. With AI, videos, speeches, and even public records can be altered in ways that go unnoticed by the average viewer. Unlike traditional censorship, where missing information is evident, reality editing seamlessly replaces reality with a fabricated version. This form of control is harder to detect, as the altered content blends into the information landscape without a noticeable gap.

Examples of Traditional Censorship:

Government Suppression: Regimes blocking media coverage or jailing dissidents who speak against the state agenda.

Corporate Censorship: Social media platforms removing posts or accounts that they consider to violate their terms of service, sometimes at the behest of the government.

Historical Erasure: Textbooks or YouTube videos being edited to omit uncomfortable truths about a nation’s past.

Reality Editing in Action

One of the most famous recent examples of reality editing is the manipulation of Alicia Keys’ Super Bowl performance. Her missed note during the live performance was removed from the official NFL video, making it appear as though her performance was flawless. This alteration was not labeled or disclosed, leaving future viewers with a fabricated version.

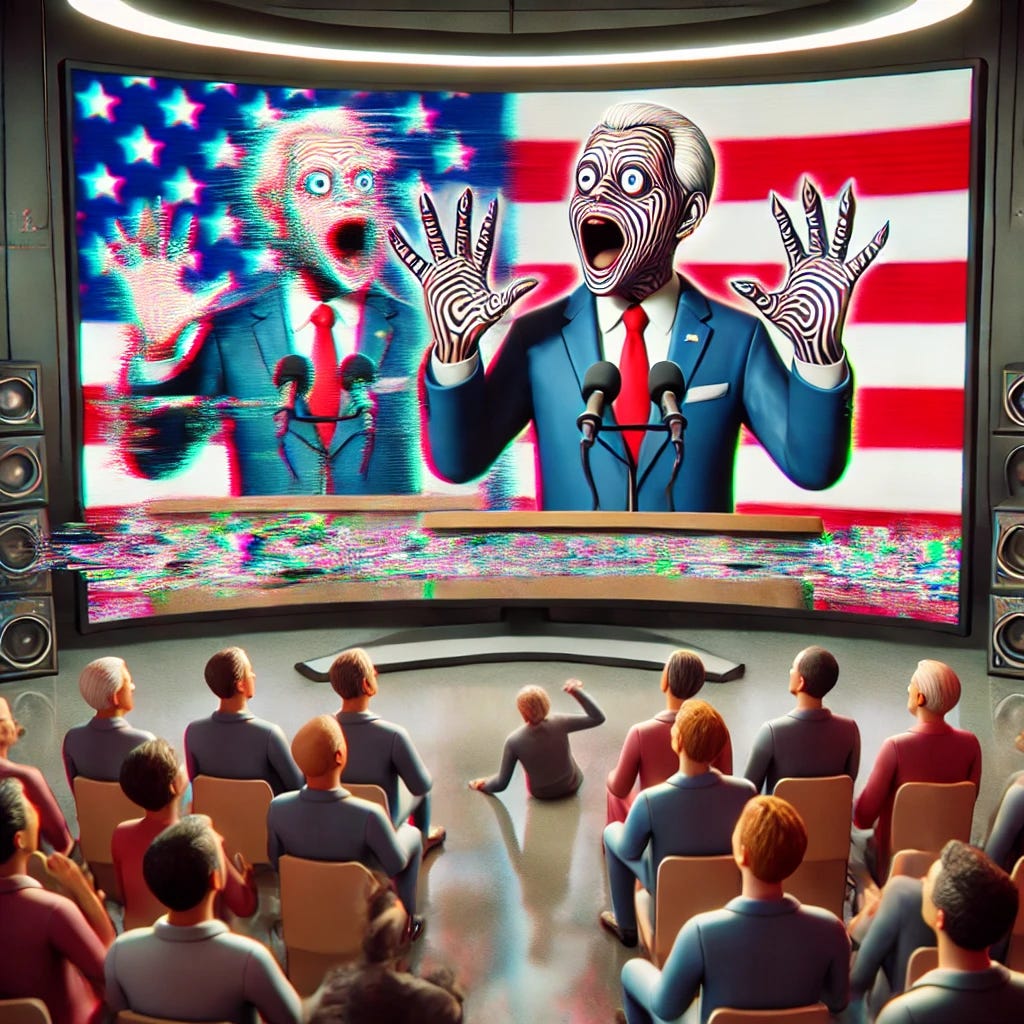

This form of editing becomes even more alarming when applied to political or historical content. Imagine election campaign videos being subtly edited to mislead viewers about a candidate’s message or history. The problem is compounded by AI-driven tools that can convincingly alter a person’s voice, making them appear to say things they never did. In the case of deepfakes, for instance, an AI-generated video of Kamala Harris was shared online by Elon Musk without a disclaimer that it was a parody, allegedly leading to widespread confusion (PetaPixel). If people were confused by that video, it raises the question of the intelligence of the electorate.

The key distinction between traditional censorship and reality editing is transparency. While traditional censorship can often be recognized and countered, reality editing is far more covert, presenting a version of events that may go unquestioned, especially if no one recalls or records the original reality. The danger lies in its subtlety—the absence of an obvious breach of free speech makes it more difficult to challenge, creating an environment where public perception can be manipulated without resistance.

While traditional censorship confronts free speech directly, reality editing operates in the shadows, reshaping information in real time. It’s not about silencing voices but about distorting them, creating a new frontier for controlling narratives.

AI in Reality Manipulation: A First Amendment Perspective

The burgeoning capability of artificial intelligence (AI) to edit reality in real time poses unique challenges to the principles of the First Amendment. As AI technologies like deepfakes become more sophisticated, they blur the lines between reality and fabrication, raising significant free speech issues. This manipulation actively reshapes public perception, creating a new era where the right to accurate information is under threat.

Legal and Ethical Dilemmas: The use of AI to manipulate media content tests the boundaries of the First Amendment, which protects free speech but also implies a marketplace of ideas where truth can prevail. When AI alters what a person appears to say or do, it interferes with that person's free speech rights and the public's right to accurate information. This raises questions about how such technologies should be regulated without infringing on free expression.

Political and Public Implications: Consider the implications of AI-manipulated content during elections or public emergencies, where the authenticity of information can significantly influence public response and democratic processes. The altered video of Kamala Harris is a stark example of how deepfakes could be used to mislead voters or slander public officials, complicating the already complex dialogue around political speech and truth.

Responsibilities and Safeguards: Tech companies and media outlets are responsible for detecting and disclosing AI manipulations. Some have proposed legislative responses that would seek to strike a balance between protecting the public from deceptive practices and respecting the essence of free speech. These include laws like those recently passed in California to ban deepfakes in political ads, which aim to protect the electoral process while navigating First Amendment rights. That will be a tricky balance indeed.

Is AI-Generated Deepfake Speech “Coerced Speech”?

Using AI to generate content that appears to be from a specific individual can raise significant First Amendment concerns, specifically regarding coerced speech. Coerced speech occurs when individuals are compelled to express ideas or statements against their will, which the First Amendment generally protects against. When AI creates content that puts words in someone's mouth—literally in the case of deepfakes—it can be argued that this technology coerces speech by attributing statements to individuals that they did not make. This could be seen as a violation of their right not to speak or to control their own speech.

The matter would seem to hinge entirely on whether the video was designed to mislead or defraud the public. The irony is that much of the political speech made by human politicians in person might qualify as an attempt to mislead and defraud the public.

Nevertheless, the legal implications are still evolving, and courts and legislators will have to grapple with balancing the protection of individual rights against the freedoms that allow for technological innovation. Debates often focus on whether these AI manipulations should be considered harmful and misleading enough to warrant restrictions or if such regulations would infringe on the creators' freedom of expression. Thus, while AI's capabilities grow, so does the urgency for legal frameworks and social responsibilities that address these nuanced First Amendment issues without stifling innovation or unduly infringing on free speech rights.

Ethical Dilemmas

Distortion of Truth

AI's capability to seamlessly alter media blurs the lines between fact and fabrication. This distortion complicates the public's ability to discern truth, potentially altering perceptions and memories of historical and current events. The ethical question arises: How much alteration is too much before the truth is lost?

Trust Erosion

AI-manipulated media can significantly undermine trust in institutions, the media, and archival records. If the authenticity of content is continuously in question, it can lead to a generalized skepticism that erodes trust in key social contracts. Some would say, “Well, isn't that their goal?” And I say yes, which is why this article, in particular, does not take a hard position one way or the other on the use of deepfakes. It would seem that their use in parody is protected speech, and that laws such as the one in California will be struck down.

Legal and Ethical Concerns

Laws like those introduced by Governor Gavin Newsom, which aim to ban deepfakes in election campaigns, highlight the efforts to curtail the misuse of AI in altering public perception. These laws raise ethical debates about balancing regulation with freedom of expression, questioning where to draw the line in legal terms.

Parody vs. Manipulation

The distinction between parody, protected under free speech laws, and harmful manipulation, which can cause real-world damage, is crucial yet, for some (apparently), hard to detect. Legal systems struggle with where to set boundaries, often relying on context and intent to differentiate satire from malicious falsehood. It seems likely that the closer the satire is to the truth, the more likely it is to be missed as satire and perceived as a maliciously false publication. A new form of uncanny valley?

These dilemmas illustrate the complexities of integrating advanced AI technologies into society. Addressing them requires careful consideration of both technological capabilities and the fundamental values of truth and trust that hold societies together.

Regulation of AI Media

Governments need to implement clear but careful regulations to manage the ethical challenges posed by AI. Recent laws, such as Gavin Newsom’s ban on AI-generated deepfakes in political ads, represent the first steps toward addressing the misuse of AI. Many (including us) think that because the law was enacted in reaction to a parody, it will be challenged and struck down as unconstitutional. Any regulations must be crafted to balance protecting free speech with ensuring that AI is not used to mislead the public.

Transparency in AI-Generated Content

Requiring clear labeling of AI-manipulated media is crucial to prevent public deception. Content platforms must take responsibility by adopting transparency standards. These could include mandatory disclosures whenever AI is used to alter video, audio, or text, making it clear to viewers what is real and what has been digitally modified.

Public Education

One of the most effective defenses against AI manipulation is public awareness. Enhancing digital literacy through education programs that teach individuals how to identify altered content is critical. By equipping people with the tools to question and analyze what they see online, the spread of misinformation can be significantly curbed.

Technological Countermeasures

AI detection systems are being developed to identify manipulations in real time. These tools can analyze videos, images, and audio for signs of alteration, helping content platforms and users alike verify the authenticity of what they’re viewing. Investing in these technologies and promoting their widespread use will be key to maintaining the integrity of information in the digital era.
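A far simpler check than AI-based detection is to compare a cryptographic fingerprint of an archived original against the version currently being served. The sketch below is only an illustration of that idea, not a description of any real detection product: the function names and the two sample byte strings are hypothetical stand-ins for real media files. It can show only that the bytes changed, not what changed or why, and a harmless re-encoding would trip it just as readily as a deceptive edit.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def was_altered(archived_digest: str, current_data: bytes) -> bool:
    """Return True if the current bytes no longer match the archived digest."""
    return sha256_hex(current_data) != archived_digest


# Hypothetical stand-ins for a clip captured at broadcast time and the
# version a platform serves later. Real use would read file bytes instead.
original_clip = b"live broadcast audio, including the missed note"
edited_clip = b"live broadcast audio, with the missed note corrected"

# The digest is recorded once, when the original is archived.
archived = sha256_hex(original_clip)

print(was_altered(archived, original_clip))  # False
print(was_altered(archived, edited_clip))    # True
```

A check like this works only if someone recorded the original, or at least its fingerprint, before the edit was made, which is exactly the condition that undisclosed reality editing tends to undermine.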
