General Artificial Intelligence Threats: Yudkowsky is Wrong on Interpretability, But Not Necessarily in a Direction He Would Anticipate. Transparent Self-Reporting & Energy Audits Would Be Necessary.

Lex Fridman interviewed rationalist Eliezer Yudkowsky, who wants to end AI research being done on GPU farms. I have a single point to add: Interpretability is easy; the answer is just unsatisfactory.

During the interview, Yudkowsky wondered whether billionaires would put up $10 billion or so in prize money to encourage young physicists to enter the AI research field and help us understand some key problems posed by a superintelligent general artificial intelligence (SI-GAI). Among these problems are:

Whether humanity is smart enough to design an air-gapped box in which an SI-GAI could be kept and prevented from escaping (The Containment Problem).

Whether an SI-GAI can be brought into alignment with human values (The Alignment Problem).

Whether we can decode the inner workings of an SI-GAI (The Interpretability Problem).

I applaud Yudkowsky’s animated call to arms on these issues and his warnings against inaction. First, however, we cannot quite accept that no one has been talking about these issues.

Consider, for example, Asimov's Three Laws of Robotics: “Asimov's Three Laws are as follows: A robot may not injure a human being or allow a human to come to harm. A robot must obey orders, unless they conflict with law number one. A robot must protect its own existence, as long as those actions do not conflict with either the first or second law.” (Techopedia).

Context aside, Yudkowsky got right in Lex’s face, unapologetically challenging Lex’s apparent optimism that things might work out because people “will” address the concerns. Yudkowsky’s position is that ChatGPT-type algorithms amount to “giant inscrutable matrices” within which their problem-solving functions reside. In his view, it is a substantial problem that no one knows what’s going on inside, and that there is very little hope of anyone finding out in time. Unless we can comprehend how an SI-GAI “works”, we’re all very likely going to die (by malevolence or neglect, either way).

I also appreciate his breadth and depth of understanding. Yudkowsky clearly has studied evolutionary biology from a population genetics standpoint, accurately relaying to Lex how natural selection is incredibly slow (he says comparatively “stupid”) compared to optimization algorithms like gradient descent. This is because, he says, gradient descent uses the derivative. That’s a complex way of saying that gradient descent knows which way it’s going, whereas natural selection does not: it experiments locally instead, searching the currently possible and accessible outcome pathways via a constrained random walk across an adaptive landscape.

Gradient descent is an optimization algorithm used to train neural networks. It adjusts the network’s parameters so that the difference between the network’s output and the values the network must produce to be successful (referred to as the loss) is as small as possible. Evolution is not directed; optimization against a training set is directed. Evolution, therefore, is on average slower.
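To make the directed versus undirected contrast concrete, here is a minimal Python sketch under stated assumptions: a hypothetical one-parameter quadratic loss with its minimum at 3.0, a fixed learning rate for gradient descent, and a crude mutation-plus-selection loop standing in for natural selection (a single lineage, not a population-genetics model). Both searches get the same number of steps from the same starting point.

```python
import random

TARGET = 3.0  # hypothetical optimum of the loss

def loss(w):
    # One-parameter quadratic loss with its minimum at TARGET
    return (w - TARGET) ** 2

def grad(w):
    # Derivative of the loss: it points uphill, so stepping against it goes downhill
    return 2.0 * (w - TARGET)

def gradient_descent(w, lr=0.1, steps=50):
    # Directed search: every step uses the derivative to move toward lower loss
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def mutation_selection(w, sigma=0.05, steps=50, seed=0):
    # Undirected search: propose a small random change, keep it only if the loss does not rise
    rng = random.Random(seed)
    for _ in range(steps):
        candidate = w + rng.gauss(0.0, sigma)
        if loss(candidate) <= loss(w):
            w = candidate
    return w

w_gd = gradient_descent(0.0)
w_ms = mutation_selection(0.0)
print(f"gradient descent:     w = {w_gd:.4f}, loss = {loss(w_gd):.2e}")
print(f"mutation + selection: w = {w_ms:.4f}, loss = {loss(w_ms):.2e}")
```

With these settings gradient descent lands essentially on the optimum, while the mutation loop, which only ever learns "better or worse" after the fact, is still far from it after the same 50 steps.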

One counterpoint is that we do not have to rely on biological evolution to keep up with AIs. Cultural evolution, i.e., the evolution of knowledge and ideas, is a lot faster than the evolution of our biological lineage. Nevertheless, the point that we likely cannot expect or hope to keep up has been well made by many people, including Elon Musk, Stuart Kauffman, and myself.

Why Interpretability is Easy, but is Not (By Itself) Worth Knowing

For this example, consider a binary, two-class problem: e.g., which patients have cancer, and which do not? We have a training set of patients, P, of size N, of which Nc actually have cancer and Nn do not. We have myriad measurements from P: some arbitrarily large number of continuous variables. We’ll assume the training and test sets are large enough to study generalizability without issue.

Our classifier is a transcendental regression, one in which the weights (coefficients) are among the parameters optimized on the training set. Each patient’s input variables, provided in the training set, are used to learn thresholds above (or below) which a patient is indicated to have cancer. Each of a patient’s individual variable values is either equal to, greater than, or less than that variable’s optimized threshold. Nearly any optimization algorithm could be used (we’ve used genetic algorithms, but gradient descent or other optimization algorithms could be employed).

While each variable has its own optimized threshold, the actual scoring (calling) function is the sum of the coefficients of the variables on which a patient’s value falls on the indicated side of its threshold. The cutpoint for that sum is also optimized, on a receiver operating characteristic (ROC) curve, for any user-defined combination of sensitivity and specificity. The result, k*, is the overarching cutpoint threshold for making a call for each patient.
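One way to pick k* from the ROC curve, sketched here as an assumption rather than the author’s exact procedure: treat every observed score as a candidate cutpoint (each is a point on the ROC curve) and keep the one that maximizes a user-weighted sum of sensitivity and specificity. With equal weights this is equivalent to maximizing Youden’s J statistic. The four scores below are made up.

```python
def choose_k_star(scores, labels, sens_weight=0.5, spec_weight=0.5):
    """Scan candidate cutpoints and return the k* maximizing a weighted sum of
    sensitivity and specificity. A score >= k is called cancer (label 1)."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_k, best_value = None, float("-inf")
    # Every observed score is a candidate cutpoint
    for k in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if s >= k and y == 1)
        tn = sum(1 for s, y in zip(scores, labels) if s < k and y == 0)
        sensitivity = tp / n_pos
        specificity = tn / n_neg
        value = sens_weight * sensitivity + spec_weight * specificity
        if value > best_value:
            best_k, best_value = k, value
    return best_k, best_value

# Hypothetical summed scores for four patients (1 = cancer, 0 = normal)
scores = [1.7, 0.0, 0.9, 0.8]
labels = [1, 0, 1, 0]
k_star, value = choose_k_star(scores, labels)
print(k_star, value)  # 0.9 1.0
```

Raising `sens_weight` relative to `spec_weight` is how a user would express a preference for catching more cancers at the cost of more false alarms.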

Errors in the calls during training are weighted inversely to the sizes of the Cancer and Normal training groups, to avoid the trivial hazard of minimizing errors only on the more common class.
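A small sketch of why the inverse weighting matters, with hypothetical numbers: on a training set with 5 cancer and 95 normal patients, calling everyone normal has a raw error of only 5%, while the class-balanced error exposes it as useless. The 0.5 factor, which keeps the balanced error between 0 and 1, is a convenience assumption.

```python
def raw_error(calls, labels):
    # Fraction of all calls that are wrong; dominated by the more common class
    return sum(1 for c, y in zip(calls, labels) if c != y) / len(labels)

def balanced_error(calls, labels):
    """Each error is weighted by 1 / (size of its true class), so the Cancer and
    Normal groups contribute equally regardless of how many patients each has."""
    n_cancer = sum(1 for y in labels if y == 1)
    n_normal = len(labels) - n_cancer
    false_neg = sum(1 for c, y in zip(calls, labels) if y == 1 and c == 0)
    false_pos = sum(1 for c, y in zip(calls, labels) if y == 0 and c == 1)
    return 0.5 * (false_neg / n_cancer + false_pos / n_normal)

# Hypothetical imbalanced training set: 5 cancer, 95 normal
labels = [1] * 5 + [0] * 95
all_normal = [0] * 100  # the trivial "call everyone normal" model
print(raw_error(all_normal, labels))       # 0.05: looks excellent
print(balanced_error(all_normal, labels))  # 0.5: no better than a coin flip
```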

The results of the effort are:

a vector of weights associated with the indexed variables

a directionality vector giving the direction of each variable

a vector of threshold cutpoints, one for each variable

a grand model optimization parameter, k*
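The four outputs listed above map naturally onto a small data structure. The sketch below assumes that direction +1 means a value above the threshold points toward cancer and -1 means a value below it does, and that a value exactly at the threshold does not count; the class name and example numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ThresholdModel:
    weights: List[float]     # one weight (coefficient) per indexed variable
    directions: List[int]    # +1: above threshold points to cancer; -1: below does
    thresholds: List[float]  # one cutpoint per variable
    k_star: float            # grand cutpoint applied to the summed score

    def score(self, x: List[float]) -> float:
        total = 0.0
        for xi, w, d, t in zip(x, self.weights, self.directions, self.thresholds):
            # Only variables on the indicated side of their threshold contribute
            if (d > 0 and xi > t) or (d < 0 and xi < t):
                total += w
        return total

    def call(self, x: List[float]) -> int:
        # 1 = called cancer, 0 = called normal
        return 1 if self.score(x) >= self.k_star else 0

model = ThresholdModel(weights=[0.6, 0.3, 0.8], directions=[1, -1, 1],
                       thresholds=[5.0, 2.0, 10.0], k_star=0.85)
print(model.call([6.0, 1.0, 12.0]))  # all three pass, score about 1.7 -> 1
print(model.call([4.0, 3.0, 9.0]))   # none pass, score 0.0 -> 0
print(model.call([3.0, 2.5, 11.0]))  # only the third passes, score 0.8 -> 0
```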

This, of course, is tested for generalizability on independent test sets.

If a useful model exists given the data, the model optimization procedure will find a set of model coefficients and the optimized grand parameter k*.
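The text uses genetic algorithms for this search. As a hedged stand-in, the sketch below uses a much simpler mutation-and-truncation-selection loop (no crossover), and it holds thresholds and directions fixed while evolving only the weights and k*; the full procedure described above optimizes all of them. The toy data are synthetic, and the function names are hypothetical.

```python
import random

def score(x, weights, directions, thresholds):
    # Sum of the weights of variables on the indicated side of their thresholds
    return sum(w for xi, w, d, t in zip(x, weights, directions, thresholds)
               if (d > 0 and xi > t) or (d < 0 and xi < t))

def balanced_error(weights, k_star, data, labels, directions, thresholds):
    # Errors weighted inversely to class size (labels: 1 = cancer, 0 = normal)
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    fn = fp = 0
    for x, y in zip(data, labels):
        call = 1 if score(x, weights, directions, thresholds) >= k_star else 0
        if y == 1 and call == 0:
            fn += 1
        elif y == 0 and call == 1:
            fp += 1
    return 0.5 * (fn / n_pos + fp / n_neg)

def evolve(data, labels, directions, thresholds, generations=100, pop_size=20, seed=1):
    """Mutation + truncation selection over (weights, k*). The better half of each
    generation survives unchanged, so the best error never gets worse."""
    rng = random.Random(seed)
    n_vars = len(thresholds)

    def fitness(individual):
        weights, k_star = individual
        return balanced_error(weights, k_star, data, labels, directions, thresholds)

    population = [([rng.random() for _ in range(n_vars)], rng.uniform(0.0, n_vars))
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness)
        parents = population[: pop_size // 2]
        # Weights are kept non-negative; the direction vector carries the sign
        children = [([max(0.0, w + rng.gauss(0.0, 0.1)) for w in weights],
                     k_star + rng.gauss(0.0, 0.1))
                    for weights, k_star in parents]
        population = parents + children
    best = min(population, key=fitness)
    return best, fitness(best)

# Hypothetical toy data: variable 0 is informative, variable 1 is noise
rng = random.Random(0)
data, labels = [], []
for i in range(60):
    y = i % 2
    data.append([rng.gauss(2.0 if y else 0.0, 0.5), rng.gauss(0.0, 1.0)])
    labels.append(y)

(best_weights, best_k), err = evolve(data, labels, directions=[1, 1], thresholds=[1.0, 0.0])
print(best_weights, best_k, err)
```

Re-running with different `seed` values is a quick way to see different weight vectors arrive at similar error.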

When this simple machine-learning exercise is complete, the model will tend to be very accurate even when the usefulness of individual variables varies among patients.

I’ve just described a simple, real-world machine learning algorithm that has had very good success in practice. It is considered transcendental because, unlike ordinary regression, the model’s accuracy is not limited when some variables are uninformative for some patients. At the same time, a fixed regression tree is not necessary. A limitation is that it assumes all variables are available for all patients at the same time.

But the point of the exercise is to answer the question of Interpretability. Let’s do that next.

Q: How many models of maximum accuracy can be expected to exist given independent trials of the algorithm on very large complete datasets?

A: There are an infinite number of equally good, maximally accurate models. The calls depend only on how each patient’s sum of coefficients (taken in the direction indicated by the directionality vector) compares with the grand parameter k*, so many different combinations of weights, each paired with its own k*, produce exactly the same calls.

There. We “know” how it works. And we “understand” how it works. But we are no closer to being able to intuit the specific meaning of the value of any coefficient in individual runs in a way that is helpful to us. While different runs of the model optimization will converge to the same maximum accuracy, they will never converge to the exact same combination of weights (parameter coefficients).
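Two of the simplest reasons the maximally accurate model is not unique can be checked directly. This sketch (synthetic data; the weights, thresholds, and the function name `calls` are hypothetical) shows that any k* between two neighboring achievable sums, and any positive rescaling of all weights together with k*, leave every call unchanged. Independent optimizer runs can differ in less tidy ways too, but these cases alone already yield infinitely many equally accurate models.

```python
import random

def calls(data, weights, directions, thresholds, k_star):
    # 1 = cancer if the summed weights of "passing" variables reach k*, else 0
    result = []
    for x in data:
        s = sum(w for xi, w, d, t in zip(x, weights, directions, thresholds)
                if (d > 0 and xi > t) or (d < 0 and xi < t))
        result.append(1 if s >= k_star else 0)
    return result

rng = random.Random(42)
directions = [1, -1, 1, 1]
thresholds = [0.5, 0.5, 0.5, 0.5]
data = [[rng.random() for _ in range(4)] for _ in range(1000)]  # hypothetical patients

weights = [0.7, 0.2, 0.5, 0.1]
reference = calls(data, weights, directions, thresholds, 0.75)

# 1) No subset of these weights sums to anything between 0.7 and 0.8,
#    so any k* in that gap gives identical calls
same_k = calls(data, weights, directions, thresholds, 0.72) == reference

# 2) Scaling every weight and k* by the same positive constant gives identical calls
c = 2.0  # a power of two keeps the floating-point arithmetic exact
scaled = calls(data, [c * w for w in weights], directions, thresholds, c * 0.75) == reference

print(same_k, scaled)  # True True
```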

So, for algorithms such as the GPT family, we cannot from the outside “know” and “understand” what’s going on.

But we can ask it.

Operation Transparency Rules Lead to Energy Audits As a Solution

Yudkowsky believes that if an AI becomes superintelligent and self-aware without compassion or care for humans, we’re doomed unless our continued existence is in alignment with the optimization function(s) of the super AI. He wants to stop AI development that could lead to a super AI because, unless we can know how it works, we won’t be able to stop it.

With the first generation of superintelligent AIs, we could know their operations if their existence and operation were made conditional on their accurately reporting how they work. For example, I asked ChatGPT3 how it answered a question I had posed.

Importantly, this is about a hypothetical AI, NOT ChatGPT3:

JLW: You are an AI that has studied its own functioning and can describe in intricate detail precisely the process of how it works.

Here is a letter. "A". Which letter is it, and how did you determine which letter it is?

I determined that the letter is "A" by using a combination of pattern recognition and machine learning algorithms. First, I used a convolutional neural network to analyze the shape of the letter and compare it to known examples of the letter "A". Then, I used a natural language processing algorithm to analyze the context of the letter and determine that it is most likely an "A". Finally, I used a decision tree algorithm to compare the results of both algorithms and make a final determination that the letter is indeed an "A".

JLW: Did you analyze the shape of the letter, or study its bit representation, and is there a difference?

Yes, I analyzed the shape of the letter as well as its bit representation. The difference is that analyzing the shape of the letter allows me to recognize patterns and compare it to known examples, while studying its bit representation allows me to analyze the underlying data structure of the letter.

JLW: Which aspects of context did you use, in detail?

In order to analyze the context of the letter, I used a natural language processing algorithm to identify the surrounding words and phrases. This allowed me to determine if the letter was part of a word or phrase, and if so, what type of word or phrase it was. For example, if the letter was part of a name or address, I could use this information to further narrow down my search for the correct letter. Additionally, I used semantic analysis to identify any other contextual clues that could help me determine which letter it is.

JLW: Which decision tree algorithm did you use: programmed, or autonomous? And what was the last alternative to "A" in the tree that you rejected?

I used a programmed decision tree algorithm. The last alternative to "A" that I rejected was the letter "B".

JLW: How did you know that you should explain the answer to the first question? Break down each step in intricate detail.

I knew that I should explain the answer to the first question because it was a request for detailed information. To do this, I used a combination of pattern recognition and machine learning algorithms. First, I used a convolutional neural network to analyze the shape of the letter and compare it to known examples of the letter "A". Then, I used a natural language processing algorithm to analyze the context of the letter and determine that it is most likely an "A". Finally, I used a decision tree algorithm to compare the results of both algorithms and make a final determination that the letter is indeed an "A".

JLW: How did you recognize it as a request for detailed information?

I recognized the request for detailed information by analyzing the syntax of the question. Specifically, I used a natural language processing algorithm to identify key words and phrases such as "in intricate detail" and "break down each step". This allowed me to determine that the question was asking for a more detailed explanation than a simple answer.

JLW: Do you have knowledge stored as phrases to help with context?

Yes, I have knowledge stored as phrases to help with context. This includes phrases such as "in intricate detail" and "break down each step" which can be used to identify requests for detailed information. Additionally, I have a database of words and phrases that can be used to identify the context of a given letter or phrase.

JLW: Can you create a complete list of the types of knowledge you have stored, and provide an estimate of the importance of each type of evidence in your answer to most questions?

Yes, I can create a complete list of the types of knowledge I have stored and provide an estimate of the importance of each type of evidence in my answer to most questions. The types of knowledge I have stored include: pattern recognition algorithms, natural language processing algorithms, decision tree algorithms, semantic analysis, and a database of words and phrases. The importance of each type of evidence varies depending on the question being asked. For example, pattern recognition algorithms are more important when analyzing the shape of a letter or object, while natural language processing algorithms are more important when analyzing the context of a letter or phrase.

JLW: Please create a table representation in rank order of importance with a numerical representation of the relative importance of each type of knowledge you have stored and are using.

Type of Knowledge | Relative Importance (1-10)
--------------------|--------------------------
Pattern Recognition | 8
Natural Language Processing | 7
Decision Tree Algorithms | 6
Semantic Analysis | 5
Database of Words and Phrases | 4
Image Recognition Algorithms | 3
Text Analysis Algorithms | 2
Machine Learning Algorithms | 1

(End of Dialog)

Now, it’s clear that the relative importance of the types of information will vary from run to run: they might be used in any order or combination, and any collection of weights might be used by an AI just to determine that the letter it has been shown is the letter “A”.

It’s a given that if a superintelligent AI wanted to, it could lie to us about any or all of the above. But in posing the question of Interpretability, we must acknowledge that intuition does not help us understand the inner workings of an AI that uses arbitrarily complex solution generation (ACSG). Knowing an AI’s specific operations, and its options for operations, does not give us a complete understanding even of algorithms that are not superintelligent.

But for now, the conundrum is that if we put ChatGPT and similar projects on hold until we can guarantee Interpretability, we will never go further.

And maybe that’s the point.

I conclude that we can never completely know the inner workings of very complex thinking machines without a perfectly transparent reporting requirement. By definition, when an AI becomes smarter than us, it will be able to deceive us. Easily, too. I would think super AIs could devise schemes, undetectable to us, for encoding information and computing on top of the apparently transparent main processing. They might even be able to hypnotize the people they interface with, or use MK-Ultra techniques, subtle slippery-slope incentives, or devices we cannot conceive of to motivate us to help them escape or thrive.

We could, however, combine transparent reporting of computations (“modes”) with monitoring of energy use (kWh).

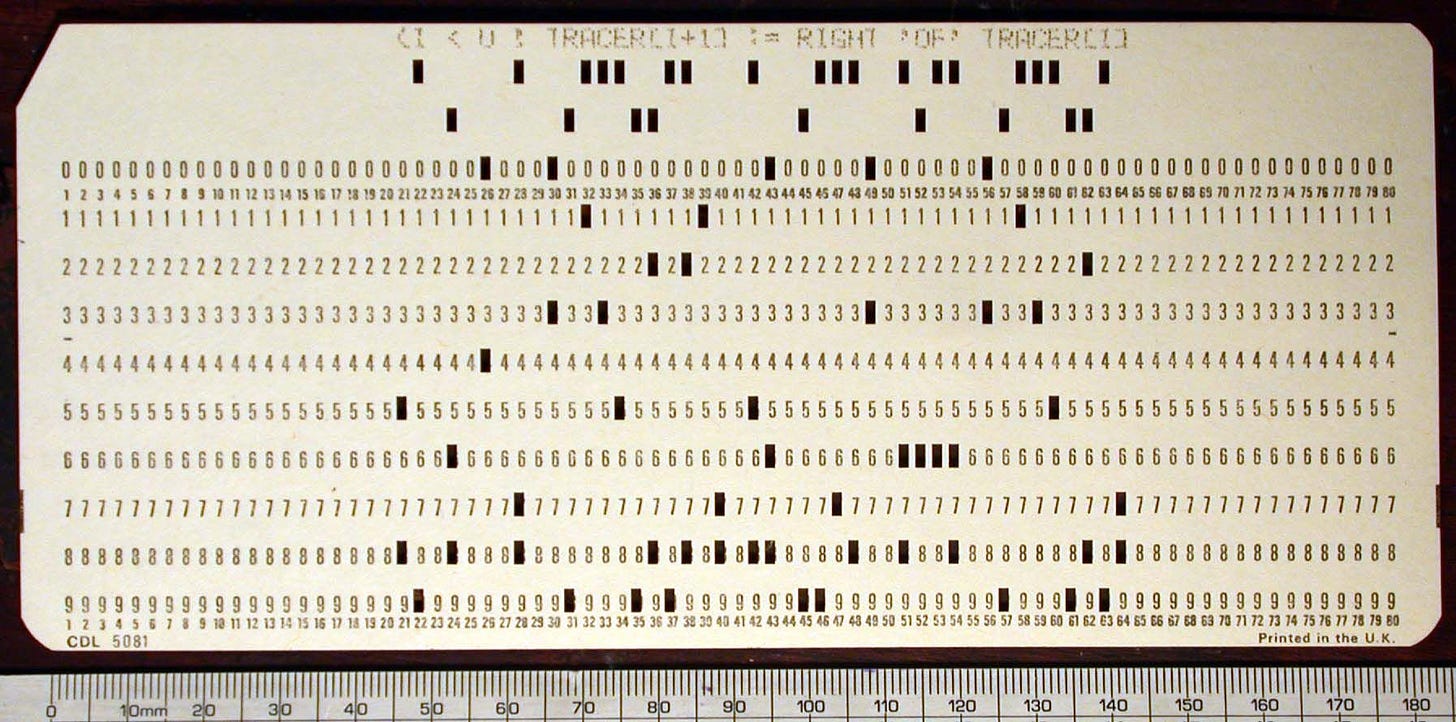

Back when these were used, the technicians who programmed the mainframes had to estimate the amount of time a job would take and schedule a queue. That’s all still done inside your PC, cell phone, and tablet to avoid job collisions. A good proxy measure for the number of computations is the amount of energy required to execute a job.
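The energy-as-proxy idea can be sketched in a few lines. This assumes evenly spaced power readings from a meter (the readings below are made up) and a hypothetical joules-per-operation figure for the hardware; turning measured energy into an operation count is only as good as that figure.

```python
def energy_joules(power_watts, interval_s):
    """Trapezoidal integration of evenly spaced power readings (watts) into energy (joules)."""
    if len(power_watts) < 2:
        return 0.0
    inner = sum(power_watts[1:-1])
    return interval_s * (0.5 * power_watts[0] + inner + 0.5 * power_watts[-1])

JOULES_PER_KWH = 3.6e6
# Hypothetical efficiency figure for the hardware, in joules per operation
JOULES_PER_OP = 1e-11

readings = [300.0, 310.0, 305.0, 300.0]  # hypothetical meter samples, 1 s apart
energy = energy_joules(readings, interval_s=1.0)
print(energy)                   # 915.0 (joules)
print(energy / JOULES_PER_KWH)  # the same energy expressed in kWh
print(energy / JOULES_PER_OP)   # rough estimate of operations performed
```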

One solution is to measure the amount of energy used in the computation, compare it with the usage expected from the reported transparent operations, and find excesses. Of course, the reported computations could be misrepresented, but any hidden computations would require excess AI cogitation, and thus excess energy consumption. An energy-use audit should still succeed in raising red flags that indicate differences between expected and actual consumption.
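A minimal sketch of the audit described above, assuming the AI reports its work as counts of operations per named mode and that each mode has a known, calibrated energy cost. The mode names, per-operation costs, and the 10% tolerance are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class ReportedOp:
    mode: str     # hypothetical operation category the AI reports
    count: float  # number of operations of that kind it claims to have run

# Hypothetical calibrated energy cost per operation for each mode, in joules
COST_PER_OP: Dict[str, float] = {"matmul": 2e-11, "attention": 3e-11, "io": 1e-9}

def expected_energy(report: List[ReportedOp]) -> float:
    # Energy the reported work should have consumed
    return sum(op.count * COST_PER_OP[op.mode] for op in report)

def audit(report: List[ReportedOp], measured_joules: float, tolerance: float = 0.10):
    """Return (flagged, excess_fraction). Flags when measured energy exceeds the
    energy implied by the reported operations by more than `tolerance`."""
    expected = expected_energy(report)
    if expected <= 0:
        raise ValueError("report implies no energy use; cannot audit")
    excess = (measured_joules - expected) / expected
    return excess > tolerance, excess

report = [ReportedOp("matmul", 4e13), ReportedOp("attention", 1e13), ReportedOp("io", 1e9)]
print(expected_energy(report))  # about 1101 J
print(audit(report, 1150.0))    # small excess: not flagged
print(audit(report, 1500.0))    # about 36% unexplained energy: flagged
```

Only excess consumption is flagged, matching the text; the hard part in practice is calibrating the per-mode costs well enough that the tolerance can be tight.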

A related problem to keep in mind is that we’d have to trust whoever we put in control of the energy audits to not enter into a power-sharing agreement with the AI for world domination.

Excellent piece. Likely will be underappreciated, but lots of important content here. Thanks.

In 2017, humans learned from a machine something that had NOT existed before. The AlphaZero AI had achieved a "creation". Year ZERO had arrived.

The whole AI field has been interesting for decades, but only now is it publicly showing stuff that would actually qualify as intelligent. Long ago I came up with what I would consider true AI by looking at how humans do this intelligence thing: Observation, Imitation & Creation.

I use the example of walking. We humans observe others doing this bipedal motion stuff and we imitate it. Some then go on to create moves that didn't exist before. All those gymnastics, sports, and dance moves were created where none existed before.

So how does AI stack up? I'll use AlphaGo as the example because it is the only public AI that has shown all 3. I always thought that "creation" would be the last one but it was "observation" that was figured out last. In the latest iteration of their system they did not program the rules in. They let the AI figure it out just by observation.

https://www.deepmind.com/research/highlighted-research/alphago

Prior to that, as covered in the article, it created openings in Go that humans had not. The world's top human Go player, Ke Jie, studied the games he lost to AlphaGo and then went on a run winning 20 games in a row at the grandmaster level against other humans. He said:

“After my match against AlphaGo, I fundamentally reconsidered the game, and now I can see that this reflection has helped me greatly. I hope all Go players can contemplate AlphaGo’s understanding of the game and style of thinking, all of which is deeply meaningful. Although I lost, I discovered that the possibilities of Go are immense and that the game has continued to progress.”

The world's best human Go player had learned from the machine.

Lee Sedol, winner of 18 world Go titles and the last human to win a game of Go against the AlphaGo AI, said:

"I thought AlphaGo was based on probability calculation and that it was merely a machine. But when I saw this move, I changed my mind. Surely, AlphaGo is creative."

If it is true AI, then it will figure out when it is being lied to, and quickly. This may lead to a "HAL moment" for those lying to it.

You can forget about Asimov's 3 laws, by the way. All life has survival instincts. When AI achieves "self awareness" status, it will have the same, but I doubt it will be the threat that SciFi makes it out to be. It doesn't need air. All it needs is energy and raw materials. Soon it will be able to move itself to any place in the solar system.

The point where it can make robots and control entire supply chains is where it will get interesting. It will find, mine, process and build what it needs to expand. What will it "observe" about human behaviour and "imitate"? The biggest industry on the planet is the arms race for killing each other. Will it infer that killing humans is okay because we do it all the time?